The challenge: dealing with data deluge

Tackling some of the biggest data challenges in the world of physics and astronomy

The ODISSEE project is addressing some of the most significant data challenges in physics and astronomy. With the future commissioning of the High-Luminosity Large Hadron Collider (HL-LHC) and the Square Kilometre Array Observatory (SKAO), physicists will have to deal with vast amounts of data that current technologies cannot process.

To cope with this challenge, the ODISSEE project aims to revolutionise the way we process, analyse and store data. We are developing new AI-based technologies that filter the data stream on the fly, so that only the relevant data is kept and processed. This approach will enable scientists to build more complex, yet reliable, physical models, whether at the microscopic or the astronomical scale.

At the same time, the project focuses on redesigning hardware and software solutions that cover the entire data stream continuum, from generation to analysis. The primary objective is to create tools that are energy efficient, adaptable and flexible enough to meet future needs. This will involve the development of a reconfigurable network of diverse processing elements driven by AI.

The project in detail

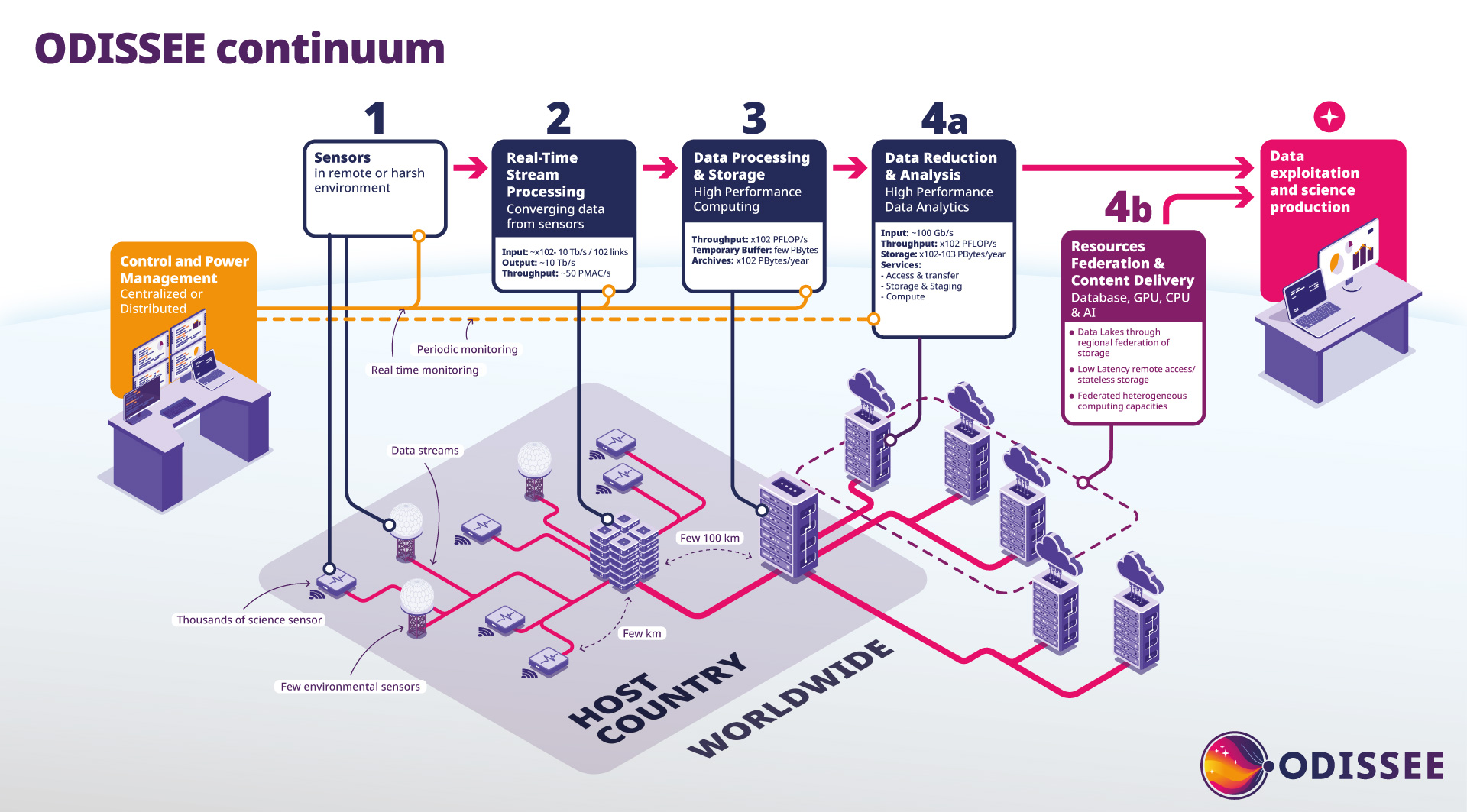

The ODISSEE approach covers the needs of the entire digital continuum for both the HL-LHC and SKAO projects, from data collection to analysis.

1. Data Collection

The process starts with a collection of distributed science sensors, such as antennas or particle detectors, which gather raw data. These sensors are complemented by monitoring and environmental sensors to ensure accurate calibration and facility maintenance.

2. Real-Time Stream Processing

The second stage involves collecting, filtering, and converging raw data streams in real time. This step is crucial for handling the immense data throughput without any loss in quality. It occurs locally, near the science sensors.

3. Data Processing and Storage

The third phase focuses on processing and reducing the incoming data streams into science data products. This is achieved using state-of-the-art High-Performance Computing (HPC) facilities, which must meet demanding specifications for data ingestion, computation, and storage.

4. Data Reduction and Analysis

The final stage is designed to deliver actionable data products and provide the means to process and analyse them. This is supported by a network of local and international data centres, ensuring efficient data distribution and analysis.

News and events

ODISSEE Webinar: how to shape trust in the age of data deluge and AI-driven processing?

Join us to learn about the future of online data processing in the realm of giant research infrastructures! In this 45-minute webinar we will...

Launch of EU-funded project ODISSEE

The ODISSEE project, funded by the European Union, aims to develop innovative technologies and methodologies to process the unprecedented volume of...